Fin Sunday Edition #2

Our weekly recap of what got the team behind Fin talking this week

“We can’t not change, we will die”

Des and Ethan Kurzweil sat down last week for a great fireside chat. They talked about how Intercom has roared back from the brink, and why it was necessary to push the company through a lot of hard changes, fast.

In this clip, Des talks about why most AI demos are terrible, whether product people are “all that important”, and why we can’t keep building software the same way anymore (which he also covered recently here).

Full conversation below:

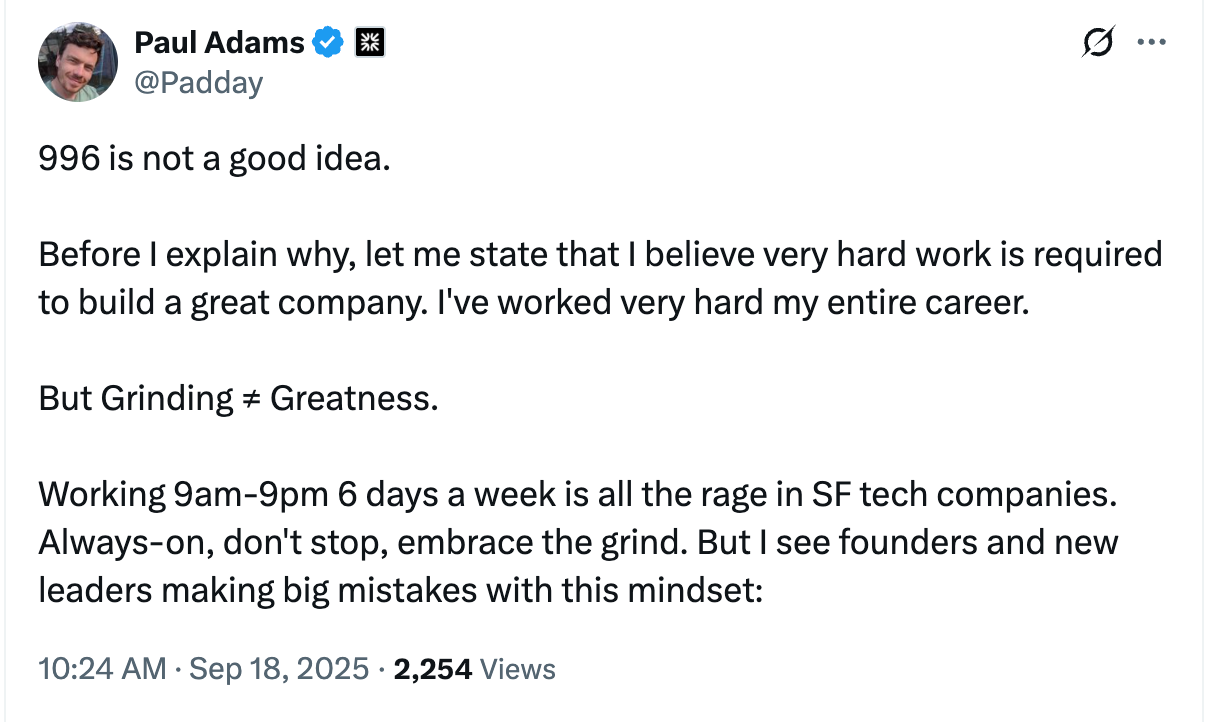

“Grinding ≠ Greatness”

Next, Paul got us thinking about how, at a human level, you can prepare yourself to do all that work to make things change and produce breakthrough thinking.

He reflected on the trend of working “996” (12 hours a day, from 9am to 9pm, 6 days a week) and challenged the wisdom of grinding for its own sake. Sometimes sleep and thinking time are necessary to produce work that’s going to break through the grind and lead to greatness.

Episodes 1 and 2 from the Fin AI Podcast

The first two episodes of the Fin AI Podcast from our AI Group dropped:

For Episode 1, Chief AI Officer Fergal Reid interviewed Staff ML Scientist Fedor Parfenov about how he brought verifiable insights to support teams when building Copilot, our AI tool to support human agents, and what he’s interested in trying over the next few months.

On Episode 2, Fergal sat down with Principal Machine Learning Engineer Pratik Bothra to talk about the work he’s doing at Fin, and why we don’t just let LLMs do all the heavy lifting in machine learning products.

And ICYMI — here’s yesterday’s recap post about our recent Building Frontier AI Products event, which featured Fergal and more members of the AI Group.

Next week we’ll look at a few of the talks in more detail.

Different rules for us and AI?

As sometimes happens, there was a common theme to many of the links flying around Slack this week:

Should there be one set of rules for humans and another for AI?

The AI Group loved this satirical take from Scott Alexander at Astral Codex Ten on why we apply different rubrics to human learning and AI learning.

It hit home because we’ve all seen the tendency to judge humans and AI by different standards — but in customer service, human reps make dumb mistakes all the time. They give wrong answers, they bullshit, they evade. The resolution rate of humans is not 100%.

So while people are judging today’s AI against a superhuman standard, they ignore how strangely similar AI’s quirks and cognitive biases are to our own.

This line of thinking also made us go back to some old, but still relevant, thoughts from DHH on why it’s easier to forgive a human than a bot — something Paul wrote about for this newsletter a few months ago.

Deconstructing aggregation theory

Jonny Burch was at Hatch (alongside our VP of Design Emmet, whose talk he references) and did a deep dive into the bundling of roles in product design.

“Not only can a designer build prototypes and even production code with AI support but also ideate radical — and until now time‑consuming — new directions in minutes with the latest image models and creative tools.”

Morgan Beller wrote up a similar take in AI Is Like Water:

“The harder your tech is to copy, the longer it will be until that moment arrives. But it always arrives. It arrived for genome sequencing, where costs have plummeted. It is arriving for batteries for electric vehicles. And if it happens for those “hard” technologies, it’s definitely going to happen for ones where copy/paste exists and open sourcing is a thing.”

We’re seeing these kinds of shifts everywhere. Chris from our CX team wrote about his own experience of role expansion and the change in expectations that comes with it — being asked to be an AI expert, getting executive attention you never had before, re-making an organization before there’s infrastructure or knowledge in place. Unrealistic asks that might not fit your use case, all tied up with the ambiguous fear of job loss for those around you.

But that’s fear talking. Instead, Chris points out all the good parts of these heightened expectations:

“Suddenly, you will have your executives’ attention, more access to product and engineering resources than ever before, and you won’t need to spend nearly as much time convincing other stakeholders why the change you’re requesting is best for customers and the company.”

We’re also seeing evidence from Fin customers like the compliance automation company Vanta, who say their support teams are able to take on more complex work, which means they’re able to hire for more specialized support roles in the future.

(Starts at 18:17)

Our parting thought

The big AI change is already here, but contrary to some of the prevailing anxieties we hear, all of this change may actually lead to even more interesting, challenging, creative work for humans.

See you next week!