AI native product thinking

Do old product frameworks still apply?

Somebody recently asked me whether Jobs-to-be-Done still makes sense in the AI era, how design thinking fits in, and, basically, “What about us, Des? No one consulted us on all this change.”

This is my best current guess at an answer.

Jobs-to-be-Done means a lot of things to a lot of people. I think of it as the following:

an interview style where you focus not on the product but on the problem the user has, decomposed into its functional, status, and emotional dimensions

a diagnosis/write-up style where you use the research to uncover the chain of events that leads the user to evaluate and purchase the product (aka The Switch), and how they gauge their satisfaction with it

All of this is very applicable in AI, but the level of abstraction you work at really matters.

You might hear “I need to log in every week to file my expenses” and think that’s the job, but that’s far too zoomed in to be useful in the AI era. These product boundaries ain’t what they used to be. AI is a strategically aggressive technology. It ignores boundaries and expands relentlessly.

For decades we’ve been used to the boundary walls of our products being defined by things like different buyers, different sets of data, vastly different numbers of features to build, different UIs, and different integrations. That’s why there are lots of different products for sales, marketing, and support, all making the same “single source of truth” claims.

But in a post-AI world, we’re realising that SaaS features are a lot easier to build, that the UI is starting to converge on text inputs (which is better for users, who no longer need to learn your UI), and that MCP servers make it easier to crawl across different parts of the tech stack to get things done.

In short, the old product borders are dead and gone and the young are turning grey.

Applying JTBD to AI

First and foremost, you need to apply Jobs to be Done at a process level, not a feature level or even a product level.

To do this, you almost certainly need to go up the org chart: talking to your current buyer’s boss, or boss’s boss.

Here’s a simple (and simplified) example:

Expense tracking is a function for which we buy software and hire people to ensure that every dollar spent on behalf of the company adheres to our policies. It runs every month. Its inputs are credit card bills, receipts, and the company’s expense policy; its outputs are payroll updates that reimburse expenses, plus flagged discrepancies that need validating.

You can think of it like this:

ExpenseTracker(bills, receipts, policy) = {payroll updates, discrepancies}

The outputs will be graded against questions like “is this better/faster/cheaper than our current function?”
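To make the function framing concrete, here is a minimal Python sketch of ExpenseTracker. It assumes a deliberately tiny policy model (a spending cap per category, in cents, with unlisted categories disallowed) and treats bills as card charges employees submit for reimbursement. Every type, field, and function name here is hypothetical, not taken from any real expense product.

```python
from dataclasses import dataclass


@dataclass
class Bill:
    """One card charge an employee submits for reimbursement (hypothetical shape)."""
    txn_id: str
    employee: str
    category: str
    amount_cents: int


@dataclass
class Receipt:
    txn_id: str
    amount_cents: int


@dataclass
class Policy:
    # Hypothetical policy model: a cap per category; unlisted categories are not allowed
    limits_cents: dict[str, int]


@dataclass
class PayrollUpdate:
    employee: str
    amount_cents: int  # amount to reimburse in the next payroll run


@dataclass
class Discrepancy:
    txn_id: str
    reason: str


def expense_tracker(
    bills: list[Bill], receipts: list[Receipt], policy: Policy
) -> tuple[list[PayrollUpdate], list[Discrepancy]]:
    """ExpenseTracker(bills, receipts, policy) = {payroll updates, discrepancies}."""
    receipts_by_txn = {r.txn_id: r for r in receipts}
    updates: list[PayrollUpdate] = []
    discrepancies: list[Discrepancy] = []
    for bill in bills:
        receipt = receipts_by_txn.get(bill.txn_id)
        limit = policy.limits_cents.get(bill.category)
        if receipt is None:
            discrepancies.append(Discrepancy(bill.txn_id, "missing receipt"))
        elif receipt.amount_cents != bill.amount_cents:
            discrepancies.append(Discrepancy(bill.txn_id, "receipt does not match bill"))
        elif limit is None or bill.amount_cents > limit:
            discrepancies.append(Discrepancy(bill.txn_id, "outside policy"))
        else:
            # Compliant and evidenced: reimburse via payroll
            updates.append(PayrollUpdate(bill.employee, bill.amount_cents))
    return updates, discrepancies
```

Framing the job purely as inputs and outputs is what lets you compare any candidate solution, human, software, or AI, against the current function on the same month of data.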

You then ask yourself: where, if anywhere, must I use humans in this function?

This is not something you can decide without a lot of validation. Maybe the answer to the question is nowhere. Or maybe it’s only at the edge cases, or at one very last authorization step.
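One way to picture the answer to “where must I use humans?” is as a routing rule inside the function. The sketch below is a hypothetical illustration, not a prescribed design: it assumes the AI reports a confidence score between 0 and 1 for each decision, and the two thresholds (`min_confidence`, `sign_off_above_cents`) are made-up knobs that encode “only at the edge cases” and “one last authorization step”.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "auto"                  # AI handles the item end-to-end
    HUMAN_REVIEW = "human_review"  # a person checks or authorizes it


@dataclass
class Item:
    amount_cents: int
    confidence: float  # hypothetical: the AI's confidence in its own decision, 0.0 to 1.0


def route(
    item: Item,
    min_confidence: float = 0.9,
    sign_off_above_cents: float = 100_000,
) -> Route:
    """Send only edge cases to a human: low-confidence calls or large amounts."""
    if item.confidence < min_confidence or item.amount_cents > sign_off_above_cents:
        return Route.HUMAN_REVIEW
    return Route.AUTO
```

Setting `min_confidence=0.0` and `sign_off_above_cents=float("inf")` is the “humans nowhere” answer; where you actually set these knobs is exactly the thing that needs a lot of validation.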

This is a decision your AI group makes as part of a conversation between three stakeholders: you, as product leader, deciding “is this a significant problem worth solving?”; your AI team, determining “can we reliably do this at high enough quality?”; and your customers, as the ultimate deciders of “what do we actually want?”

Once you’ve worked all that out, then — and not before — you are free to open Figma.

Will we ever have job boards again?

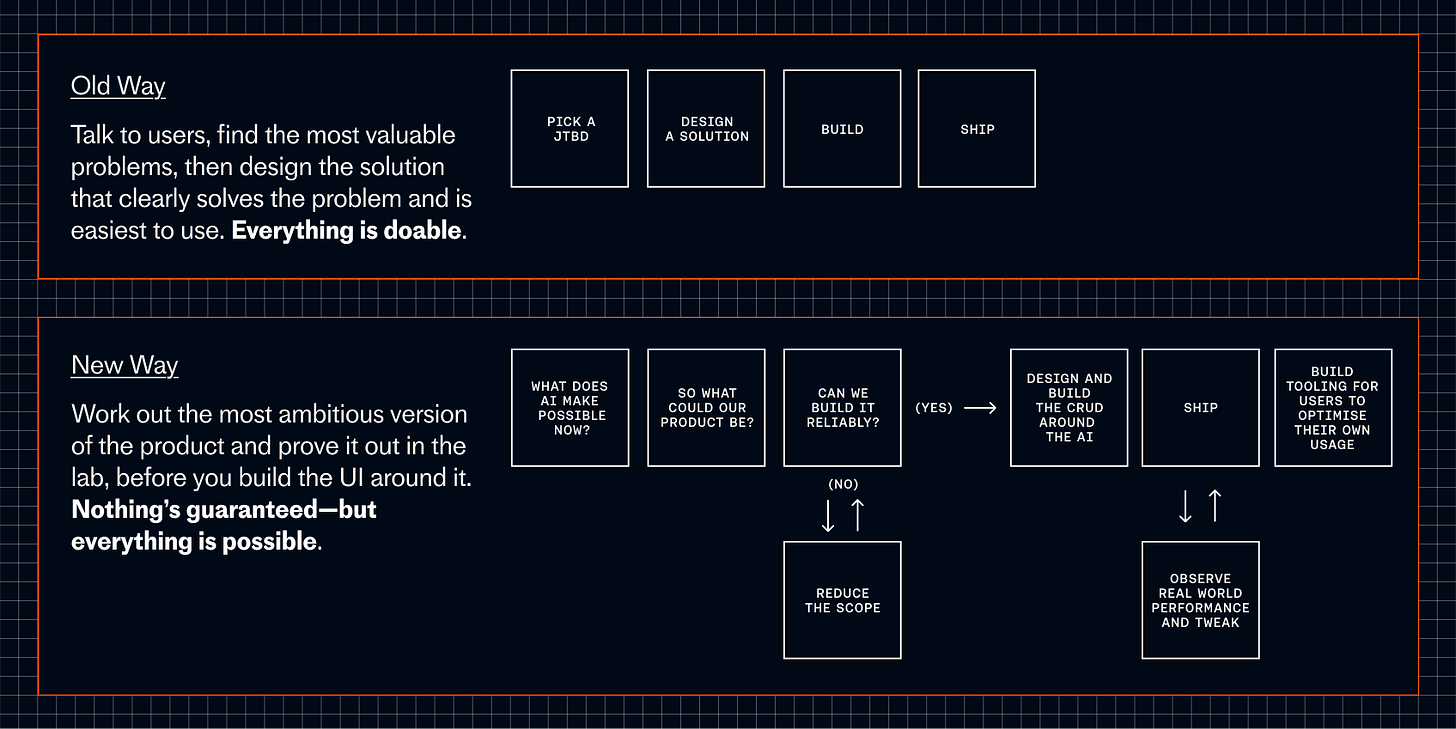

If the old world was “the PM picks the highest value job to be done, works with design on a solution and ships it” — then the new world might look more like this:

Learn which relevant challenges in your domain AI can solve

Identify products or product features that seem ripe for attack

Test whether AI can solve the challenge completely, end-to-end, with strong evaluation

If yes, proceed

If not, reduce the scope

Once you have AI working, design & build the product around it

Ship to the wild, observe, and improve
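The “test, then proceed or reduce scope” step can be sketched as a small loop. This is a hypothetical illustration: `pass_rate` stands in for whatever evaluation harness you already run, scopes are plain labels ordered from the whole job down to narrower slices of it, and the 0.95 default threshold is an arbitrary assumption, not a recommended bar.

```python
from typing import Callable, Optional, Sequence


def widest_passing_scope(
    scopes: Sequence[str],
    pass_rate: Callable[[str], float],
    threshold: float = 0.95,
) -> Optional[str]:
    """Return the widest scope whose eval pass rate meets `threshold`.

    `scopes` is ordered from widest (the whole job, end-to-end) to narrowest.
    """
    for scope in scopes:
        if pass_rate(scope) >= threshold:
            return scope  # "If yes, proceed"
        # Otherwise fall through to the next, narrower scope: "If not, reduce the scope"
    return None  # nothing passed, so AI isn't ready for this job yet
```

Only once this returns a scope does the “design and build the product around it” step begin, which matches the ordering above: the AI has to work before the UI gets designed.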

This is my best current guess. The one thing that’s certain is that things will be messy, so your mileage will vary.

Like I said, it’s all different these days.