A tale of two Agent Builders

What two competing solutions to the same design problem tell us about the future of designing AI interfaces.

On Monday this week OpenAI announced their new Agent Builder product at their annual Dev Day event. I watched this announcement with interest—not least because on Thursday this week we announced a similar product at our annual Pioneer event: a new Fin feature called Procedures.

What’s most interesting to me, though, is that both teams approached the design of these similar products from almost diametrically opposite directions:

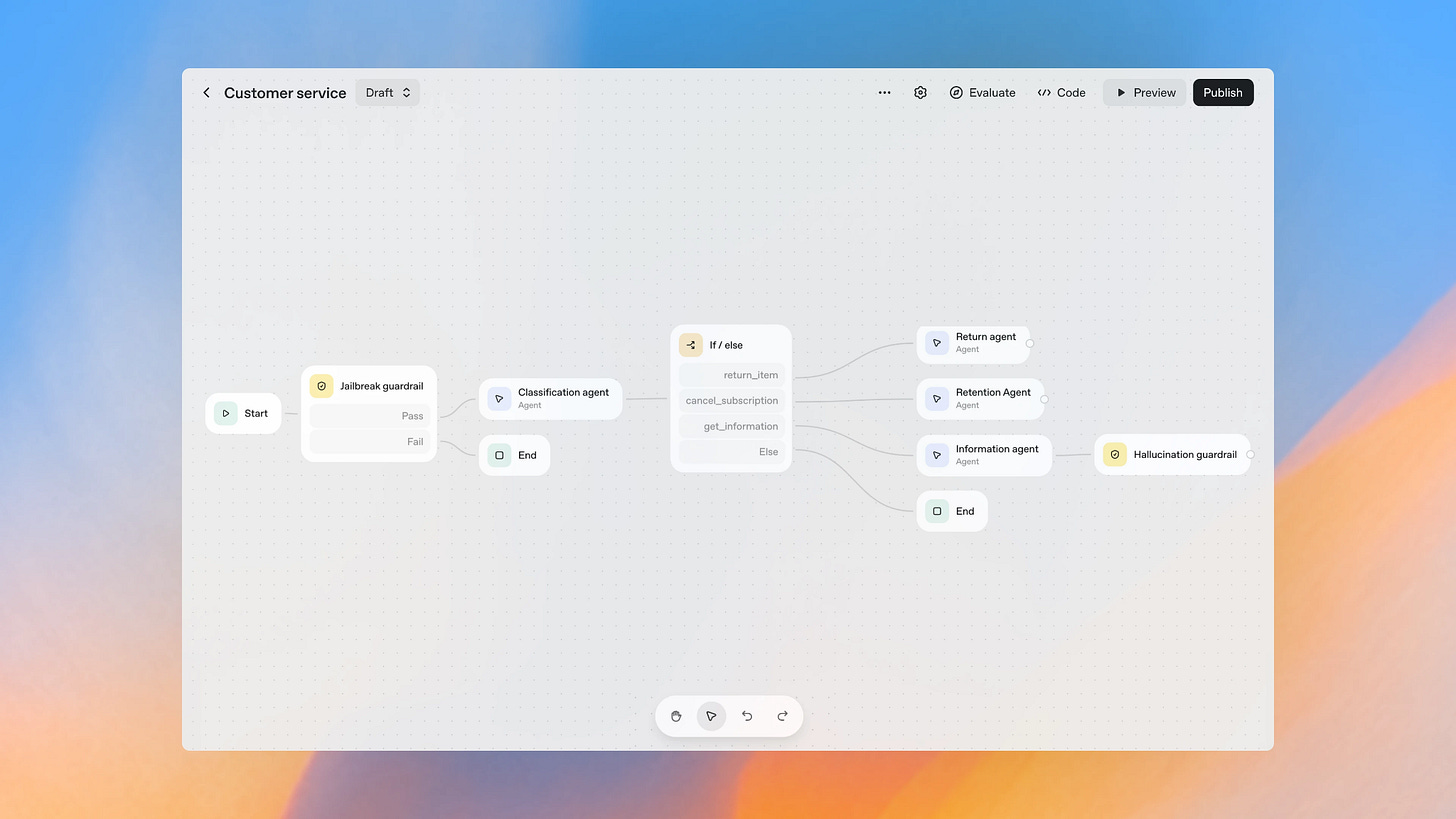

Agent Builder is a canvas-based editor for setting up A.I. agents

Procedures is a document-based editor for setting up A.I. agents

At first blush this might appear to be nothing more than an aesthetic preference: are you more of a visual thinker or a language person? (Or, if you prefer, a shape rotator or wordcel?)

However, I think there’s a lot more to it than this. In fact the thing that compels me to write about this is that I believe this comparison neatly illustrates the overriding interface design puzzle of the A.I. era:

How do we successfully combine deterministic and probabilistic interfaces?

For the uninitiated:

Deterministic systems are where the same input always produces the same output: binary, predictable, if-then-else logic. For decades, the software we have designed has been deterministic.

Probabilistic systems, on the other hand, are fuzzier. The outputs they produce can differ from one run to the next, even when given the same input, as happens when you prompt an AI model. Designing for these systems involves not certainty, but likelihoods.
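To make the distinction concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the function names, the "refund" rule, and the weights are made up, and the second function is only a toy stand-in for a model, not how any real LLM or product works.

```python
import random


def deterministic_route(message: str) -> str:
    """Same input, same output: a fixed if-then-else rule."""
    if "refund" in message.lower():
        return "billing"
    return "general"


def probabilistic_route(message: str, rng: random.Random) -> str:
    """Toy stand-in for a model: samples a label from a set of likelihoods.

    The weights below are invented for illustration only.
    """
    labels = ["billing", "general"]
    if "refund" in message.lower():
        weights = [0.9, 0.1]  # probably billing, but not certainly
    else:
        weights = [0.2, 0.8]
    return rng.choices(labels, weights=weights, k=1)[0]


if __name__ == "__main__":
    message = "I would like a refund, please"
    rng = random.Random()

    # The deterministic rule gives one answer, every time.
    print({deterministic_route(message) for _ in range(5)})

    # The probabilistic one gives a spread of answers for the same input.
    print([probabilistic_route(message, rng) for _ in range(5)])
```

The first function can be reasoned about as a rule. The second can only be reasoned about as a distribution, which is the shift in mindset the rest of this post is concerned with.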

The emergence of AI has obviously pushed software dramatically towards the latter category, and we’re still collectively figuring out the appropriate UI design patterns here. But both approaches have their pros and cons, and it’s becoming increasingly clear that we will need to figure out how to combine the best of both worlds. I therefore believe that the primary user interfaces of the future will be the ones that most gracefully blend deterministic and probabilistic patterns together.

What might that look like? Let’s take a closer look at both OpenAI’s Agent Builder and Fin’s Procedures in search of an answer.

OpenAI Agent Builder

The “nodes and noodles” canvas-based UI design pattern is well-established. There is a lot of prior art here going back a long time, from Quartz Composer to Max/MSP to MS Visual Workflow to Scratch, and all the way up to new AI agent workflow builders like Zapier and n8n. It’s stood the test of time as an accessible UI pattern for a “no code” logic builder.

But this approach is primarily and innately deterministic. The whole point of the pattern is that it allows you to visually map out an if-then-else workflow of logic gates and responses.

This may be part of why (I think it is fair to say) the initial reaction to OpenAI’s announcement felt somewhat mixed: it borrows heavily from classic patterns that seem more relevant in a deterministic world.

Now look, there are a lot of things to consider on balance here. Maybe the more technical Dev Day audience were expecting something more expressive and powerful. Maybe it’s because it was (impressively? tellingly?) built in just six weeks, and they have big plans for where to take it next; I certainly wouldn’t bet against OpenAI, one of the finest product teams around.

But there’s something here that, for me, doesn’t quite fit.

It’s hard to know how these fixed workflows will handle a lot of the doubling-back and stream-crossing that inevitably happens in real-life scenarios. The larger and more complex these workflows become, the more difficult they become to maintain; spaghetti no-code. It also just seems quite limiting to squeeze these massively-capable agentic systems through narrow logic gates. Unsurprisingly, LLMs work well with language!

I also will not pretend that I don’t have a horse in this race! But I can also say that we’re already very familiar with the canvas-based approach, which is used in Intercom’s own mature Workflows feature. That was in part why we consciously decided to take a different approach when designing Procedures…

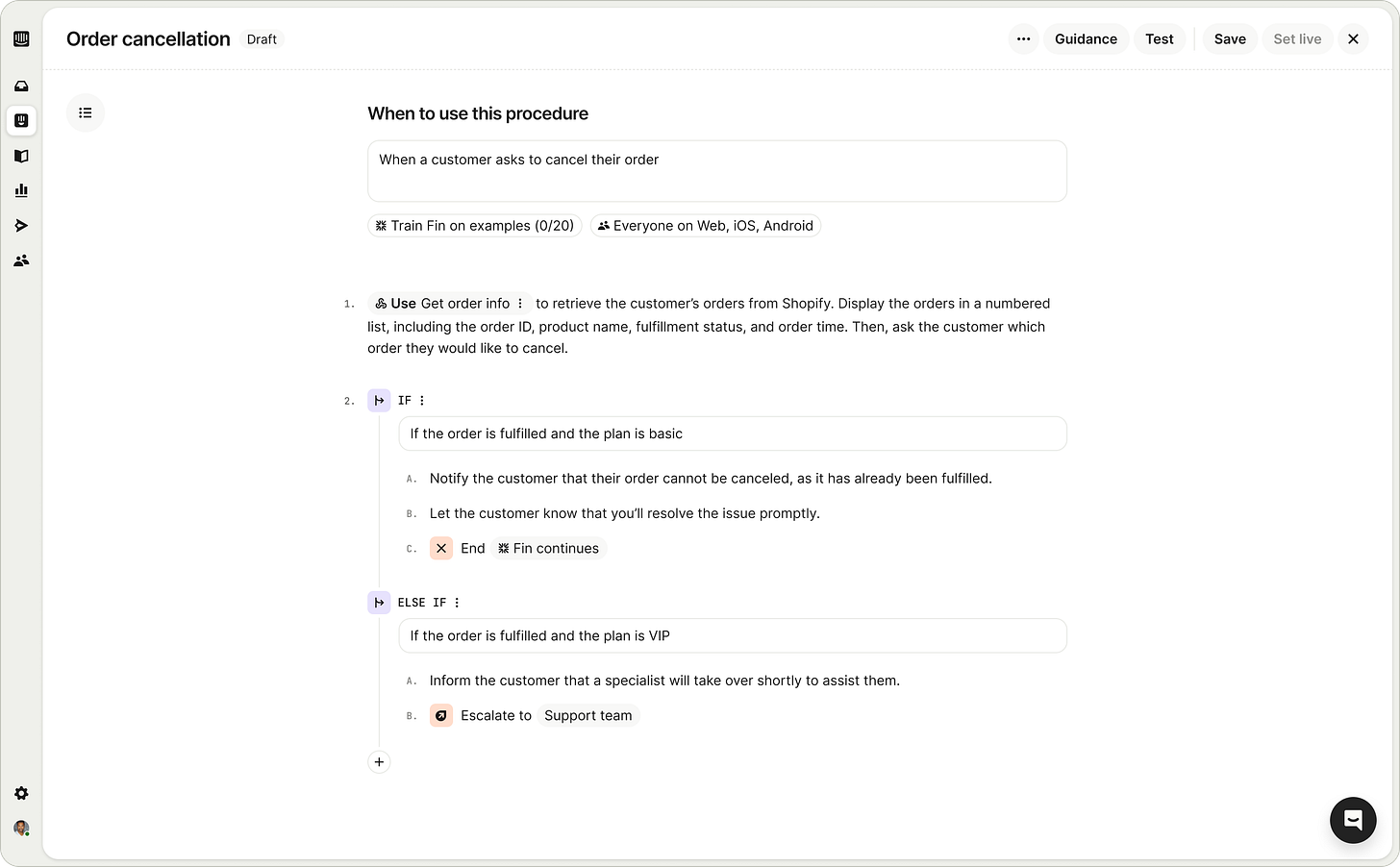

Fin Procedures

Whereas Agent Builder takes its inspiration from canvas-based UIs, Procedures could be said to feel more like a Jupyter Notebook or even a Notion doc. It’s clearly a document. But all three belong to the genus of notebook interfaces, which infuse text documents with embeddable sections of logic or controls that elevate them beyond plain text.

I’m reminded of Stephen Wolfram’s aspiration of “programming in which one is as directly as possible expressing computational thinking—rather than just telling the computer step-by-step what low-level operations it should do.” We speak our own language, and now computers can too, so it seems compelling that this be a more suitable starting point.

If you’re creating a Fin Procedure, you can start by literally just describing what you want Fin to do in plain English. But you can then also add deterministic instructions inline, as part of the document itself: Branching Logic for conditional statements, Data Connectors for fetching third-party data, and Code Blocks for when you want a specific expression.

This blended approach allows for progressive complexity, but only when you need it. You can start with a simple, straightforward set of English-language instructions that fit best with probabilistic systems. But you can then also drill down into providing fine-grained deterministic rules when appropriate. It’s a neat combination of agentic and specific.
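As a rough illustration of what "deterministic instructions inline" can mean, here is a minimal Python sketch of a document made of plain-English steps with one fixed rule embedded in it. This is not Fin's implementation or API: the names `Instruction`, `Branch`, and `resolve`, and the refund scenario itself, are hypothetical and exist only for this example.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Instruction:
    """A plain-English step, left to the model's judgement."""
    text: str


@dataclass
class Branch:
    """A deterministic inline rule: a fixed condition picks the wording."""
    condition: Callable[[dict], bool]
    if_true: str
    if_false: str


Step = Union[Instruction, Branch]


def resolve(procedure: list[Step], context: dict) -> list[str]:
    """Flatten a procedure into the instructions that apply to this context."""
    resolved = []
    for step in procedure:
        if isinstance(step, Instruction):
            resolved.append(step.text)
        else:
            chosen = step.if_true if step.condition(context) else step.if_false
            resolved.append(chosen)
    return resolved


# Hypothetical procedure: two fuzzy steps wrapped around one hard rule.
refund_procedure: list[Step] = [
    Instruction("Greet the customer and confirm which order they mean."),
    Branch(
        condition=lambda ctx: ctx["days_since_purchase"] <= 30,
        if_true="Offer a full refund.",
        if_false="Explain that the refund window has closed and offer store credit.",
    ),
    Instruction("Summarise what was agreed and ask if anything else is needed."),
]

if __name__ == "__main__":
    for line in resolve(refund_procedure, {"days_since_purchase": 10}):
        print(line)
```

The point of the sketch is the shape: most of the document stays as language for the model to interpret, and the hard rule appears only at the one step where certainty matters.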

I believe this is quite an innovation for blending probabilistic and deterministic instructions in one place. (BTW if you can think of examples of other UIs that also do this I’d love to hear from you!)

So which will win out?

OpenAI are building a generic multi-agent system for a large, possibly consumer-leaning audience, whereas Fin is a single agent focused on the much more specific use case of being a Customer Agent for businesses. So there are differences that may be relevant here.

There are also likely multiple viable routes to wherever this new type of interface ends up. We’re all still just searching for the ways in here. I wouldn’t be surprised if we started to see more probabilistic blocks appear inside of Agent Builder, just like there are deterministic blocks inside Procedures. We’ll all collectively figure it out in the end. Don’t worry, software will be fine!

A visual builder may even seem more approachable at first. But right now I’d argue that mapping out all your fixed logic flows, and filling in all the many little attribute fields with input.output_parsed.classification == "flight_info" just to get something basic working is actually harder to wrap your head around. With Procedures we’ve seen lots of early customers start by just copying and pasting in their existing internal docs for handling tricky support questions.

A probabilistic-first system actually allows you to skip a lot of the complexity inherent in a visual builder. You don’t need to worry about all of the branching logic, or exceptions, or looping back. Procedures does this for you by leaning on the LLM’s judgement, its agentic ability to take a set of high-level instructions and figure out the right thing to do in a variety of slightly different situations. This is also a near-certain bet on these core model capabilities only improving in the future.

All new UI paradigms pick a starting point and evolve from there. It’s a fun question to consider whether canvas-based or document-based is a better starting point for agent builders.

But again, this is just the starting point. The higher-order question here is how successfully we can blend these different modes of deterministic and probabilistic systems from here into something new and better: a fundamentally better way of computing.

You can learn more about Procedures and the other new Fin 3 features we announced this week here, and watch the full Pioneer keynote here.
